Artificial Intelligence (AI) in video games has come a long way from the simple patterns of 1980s arcade enemies to the complex, adaptive foes and procedurally generated worlds of today. Over the decades, game AI has improved dramatically in enemy behavior, NPC (non-player character) design, procedural content generation, and adaptive learning, enhancing realism, challenge, and player immersion. This article traces the evolution of game AI through landmark titles – from the ghostly pursuers of Pac-Man to the cunning soldiers of Half-Life and F.E.A.R., the dynamic allies and enemies of The Last of Us, and modern games driven by advanced AI. We’ll explore key breakthroughs in game AI, supported by developer insights, research, and even industry patents, to understand how smarter games have changed the way we play.

Early Days: Patterns and Personalities in the Arcade Era

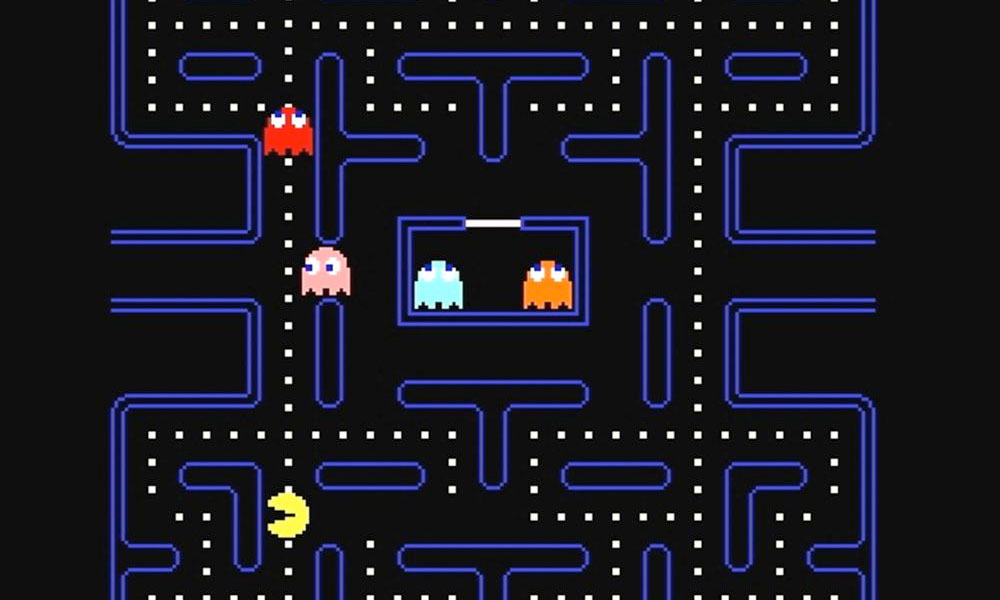

Pac-Man (1980) offers one of the earliest examples of game AI shaping gameplay. The iconic arcade game’s four ghosts – Blinky, Pinky, Inky, and Clyde – were programmed with distinct “personalities” and movement algorithms that made them more than random adversaries. Each ghost behaved differently: Blinky (the red ghost) chased the player directly, Pinky aimed for a spot a few tiles ahead of Pac-Man to cut him off, Inky chose his target from both Pac-Man’s heading and Blinky’s position, and Clyde pursued from a distance but broke off toward his corner whenever he got close.

These simple yet clever algorithms gave the ghosts the appearance of strategy, forcing players to learn and adapt to each ghost’s behavior. Early game AI like this was entirely deterministic – the ghosts followed pre-set rules – but it added depth beyond the purely predictable or completely random movements seen in many 1970s games. In Pac-Man, the blend of pattern and personality made the gameplay challenging and fun, laying the groundwork for AI as a design tool to create engaging enemies.
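To make the idea of per-ghost targeting rules concrete, here is a minimal sketch in Python. It is not the original arcade code: the tile offsets (4 and 2 tiles ahead, an 8-tile proximity radius) follow figures commonly cited in fan analyses of the game, and the function names and the `CLYDE_CORNER` coordinates are hypothetical choices for this illustration.

```python
from math import hypot

# Positions are (x, y) tile coordinates; headings are unit steps such as (1, 0).
# All names and constants are illustrative assumptions, not the arcade ROM.
CLYDE_CORNER = (0, 30)  # hypothetical "home corner" tile for Clyde


def ahead(pos, heading, tiles):
    """Return the tile `tiles` steps in front of `pos` along `heading`."""
    return (pos[0] + heading[0] * tiles, pos[1] + heading[1] * tiles)


def blinky_target(pac_pos, pac_heading, blinky_pos):
    """Blinky aims straight at Pac-Man's current tile."""
    return pac_pos


def pinky_target(pac_pos, pac_heading, blinky_pos):
    """Pinky aims a few tiles ahead of Pac-Man to cut him off."""
    return ahead(pac_pos, pac_heading, 4)


def inky_target(pac_pos, pac_heading, blinky_pos):
    """Inky reflects Blinky's position through a point just ahead of Pac-Man."""
    pivot = ahead(pac_pos, pac_heading, 2)
    return (2 * pivot[0] - blinky_pos[0], 2 * pivot[1] - blinky_pos[1])


def clyde_target(pac_pos, clyde_pos):
    """Clyde chases from afar but heads for his corner when he gets close."""
    distance = hypot(pac_pos[0] - clyde_pos[0], pac_pos[1] - clyde_pos[1])
    return pac_pos if distance > 8 else CLYDE_CORNER
```

Each rule is a single deterministic function of the game state, which is why the ghosts feel distinct yet remain learnable by the player.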

In the same era, most game “AI” was actually a set of scripted patterns or state machines. Enemies in Space Invaders (1978) marched in formation, and the Goombas in Super Mario Bros. (1985) simply walked forward until something stopped them. These behaviors were hard-coded and did not adapt to the player – once you learned the pattern, you could predict the outcome. Yet, even within these limits, developers found ways to give the illusion of intelligence. For example, the arcade hit Ms. Pac-Man (1982) introduced slight randomness to ghost movements to prevent players from memorizing perfect patterns, making the challenge feel more dynamic. Such early techniques showed that even basic AI routines could significantly impact gameplay. They also highlighted a principle that would guide game AI for decades to come: AI doesn’t have to be complex to be effective; it just has to create interesting, believable challenges for the player.

The 1990s: Scripting Smarter Enemies and Emergent Behavior

As computing power grew in the 1990s, game developers began pushing beyond simple patterns to create enemies that seemed to think. Finite state machines and decision trees became common techniques for enemy AI, allowing NPCs to switch between behaviors (states) based on game situations. Early first-person shooters initially featured fairly static AI – for example, the demons in Doom (1993) would charge straight at the player or follow basic triggers. But by the late ’90s, games like Half-Life (1998) stunned players with enemies that felt far more intelligent and tactical.

Half-Life revolutionized FPS (first-person shooter) AI with its military opponents, the HECU Marines. These soldiers behaved in coordinated, realistic ways: they took cover and shot from protected positions, tossed grenades to flush the player out, and would even attempt flanking maneuvers if the player stayed in one spot too long. Under the hood, the Marines were governed by a state-based AI system – essentially an organized set of behaviors and triggers – but to players, it was a revelation. Enemies shouted orders to each other, retreated when injured, and attacked from multiple angles, making each battle feel dynamic. Valve’s designers essentially scripted group tactics into the AI, so the Marines appeared to cooperate as a squad. This was a huge leap from the straightforward foes of earlier shooters, and it set the bar for “smart” AI in action games. As one analysis notes, in Half-Life the NPC soldiers could switch between states like searching, attacking, and retreating based on the player’s actions and their environment, creating more challenging encounters.
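The state-switching described above can be sketched as a small finite state machine. This is an illustration only, not Valve's code: the three states come from the text (searching, attacking, retreating), while the `LOW_HEALTH` threshold and the exact transition rules are assumptions made for the example.

```python
from enum import Enum, auto


class State(Enum):
    SEARCH = auto()
    ATTACK = auto()
    RETREAT = auto()


LOW_HEALTH = 30  # hypothetical threshold at which a soldier falls back


def next_state(state, sees_player, health):
    """Return the soldier's next state given its current state and senses."""
    if state is State.SEARCH:
        # Keep searching until the player is spotted.
        return State.ATTACK if sees_player else State.SEARCH
    if state is State.ATTACK:
        # Badly hurt soldiers break off; otherwise fight while the player is visible.
        if health < LOW_HEALTH:
            return State.RETREAT
        return State.ATTACK if sees_player else State.SEARCH
    # RETREAT: stay back while exposed, resume searching once out of sight.
    return State.RETREAT if sees_player else State.SEARCH
```

Because the next state depends on both the current state and the inputs, the same sighting of the player can produce different reactions, which is much of what makes such enemies read as tactical.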

Half-Life’s influence on AI design was felt widely. Other late ’90s games also experimented with more advanced AI: the stealth game Thief: The Dark Project (1998) had guards that could hear noises and search for the player when alerted, and GoldenEye 007 (1997) featured enemies that reacted to alarms and could be disarmed. While still relatively simple by modern standards, these AI systems introduced the idea of enemies responding to the player’s behavior in real time, rather than following a fixed script no matter what. Developers began to view AI as an integral part of game design – not just an obstacle for the player, but an element that could provide emergent gameplay, where complex scenarios arise from the interaction of simple rules. Looking back, games like Half-Life demonstrated that well-crafted AI could dramatically improve immersion, making players feel like they were up against thinking, planning adversaries rather than lines of code.

(Image: A screenshot from Half-Life (1998), where a wall scrawled with a threatening message “Yore Dead Freeman” is left by the enemy soldiers. It’s a darkly humorous detail – the AI-controlled Marines were formidable in combat even if their spelling wasn’t perfect.)

The 2000s: Tactical AI, Planning, and Procedural Worlds

By the mid-2000s, game AI techniques had grown more sophisticated and diverse. Developers were no longer limited to static state machines; they experimented with AI planning systems, learning algorithms, and large-scale procedural generation of game worlds. Two parallel trends defined this era: smarter enemy tactics in moment-to-moment gameplay, and algorithm-driven content creation that could make game worlds bigger and more varied.

On the tactical front, F.E.A.R. (First Encounter Assault Recon, 2005) became legendary for the intelligence of its enemy soldiers. F.E.A.R.’s opponents exhibit coordination and adaptability that can still impress players years later. They flank the player, vault through windows or knock over tables for cover, lay down suppressive fire, and retreat or regroup when under pressure.

Monolith Productions achieved this by using a Goal-Oriented Action Planning (GOAP) system for the AI – essentially an automated planning algorithm that lets NPCs decide on sequences of actions to meet a goal. Instead of following a simple loop (e.g. patrol then shoot), the F.E.A.R. enemies were given goals like “flush out the player” or “avoid dying” and a toolkit of actions, and the AI would plan a series of moves to achieve that goal. The result felt surprisingly realistic: the AI would react if you tried to hide or flank them in return. As one retrospective describes, F.E.A.R.’s clone soldiers remain a high point in game AI – “they’re fast moving, quick to react, utilize nearby cover, and cooperate to put pressure on the player.” Critics at the time widely praised the AI’s ability to surprise and challenge the player. This planning-based approach (inspired by academic AI research on automated planning) showed that game AI could go beyond scripted state machines to something more flexible. It influenced many games that followed and became one of several standard techniques in the AI programmer’s toolbox.
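The core idea of goal-oriented planning, searching for a sequence of actions whose effects satisfy a goal, can be sketched in a few lines. This is not Monolith's implementation: production GOAP planners typically use cost-guided search such as A*, whereas this sketch swaps in a plain breadth-first search for brevity, and the action names and world facts are hypothetical.

```python
from collections import deque
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    preconditions: frozenset        # facts that must hold before acting
    adds: frozenset                 # facts made true by the action
    removes: frozenset = frozenset()  # facts made false by the action


def plan(start, goal, actions):
    """Breadth-first search for the shortest action list that reaches `goal`."""
    start, goal = frozenset(start), frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, steps = queue.popleft()
        if goal <= state:  # every goal fact is satisfied
            return steps
        for action in actions:
            if action.preconditions <= state:
                new_state = (state - action.removes) | action.adds
                if new_state not in seen:
                    seen.add(new_state)
                    queue.append((new_state, steps + [action.name]))
    return None  # no sequence of actions achieves the goal


# Hypothetical toolkit of soldier actions for this example.
ACTIONS = [
    Action("move_to_cover", frozenset(), frozenset({"in_cover"})),
    Action("throw_grenade", frozenset({"in_cover", "has_grenade"}),
           frozenset({"player_flushed"}), frozenset({"has_grenade"})),
    Action("flank", frozenset({"player_flushed"}), frozenset({"has_line_of_fire"})),
    Action("fire", frozenset({"has_line_of_fire"}), frozenset({"player_suppressed"})),
]
```

Calling `plan({"has_grenade"}, {"player_suppressed"}, ACTIONS)` chains cover, grenade, flank, and fire without any of that ordering being scripted; remove the grenade from the starting facts and the planner reports that no plan exists.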

Around the same time, the Halo series (2001 onward) was also lauded for its enemy AI, particularly the way different alien types worked together and reacted to the player. Halo 2 (2004) popularized the use of behavior trees in game AI – a hierarchical decision-making system that is more modular and easier to manage for complex NPC behavior. Behavior trees quickly became a go-to AI architecture in many games, helping characters evaluate conditions and choose from many possible actions efficiently. Whether a game used behavior trees, planning systems, or traditional state machines, the 2000s saw AI that could handle more complex environments and player tactics. Enemies became better at using cover, team tactics, and environment navigation (aided by innovations like navigation meshes that allowed AI to understand 3D level geometry for pathfinding).
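A behavior tree of the kind described above can be sketched with two composite node types. This is a simplified illustration, not Bungie's system: it omits the "running" status that real behavior trees use for actions spanning several frames, and the node classes, world keys, and action names are all hypothetical.

```python
SUCCESS, FAILURE = "success", "failure"


class Condition:
    """Leaf that succeeds when a named fact in the world is truthy."""
    def __init__(self, key):
        self.key = key

    def tick(self, world, log):
        return SUCCESS if world.get(self.key) else FAILURE


class Act:
    """Leaf that performs an action (here, it just records its name)."""
    def __init__(self, name):
        self.name = name

    def tick(self, world, log):
        log.append(self.name)
        return SUCCESS


class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, world, log):
        for child in self.children:
            if child.tick(world, log) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Tries children in priority order; succeeds on the first success."""
    def __init__(self, *children):
        self.children = children

    def tick(self, world, log):
        for child in self.children:
            if child.tick(world, log) == SUCCESS:
                return SUCCESS
        return FAILURE


# Priorities from top to bottom: survive, then fight, then fall back to patrol.
TREE = Selector(
    Sequence(Condition("low_health"), Act("retreat")),
    Sequence(Condition("sees_player"), Act("attack")),
    Act("patrol"),
)
```

The modularity mentioned in the text shows up in how easily a new branch can be slotted into the `Selector` without touching the existing ones.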

The 2000s also brought procedural generation into the mainstream as a way of using AI-like algorithms to create game content dynamically. Instead of hand-crafting every level or character, developers wrote algorithms to assemble worlds on the fly, which can be considered another facet of artificial intelligence in games (focused on content creation rather than behavior). The idea wasn’t new – Rogue (1980) and other “roguelike” games had used random dungeon generation decades earlier – but with more computing power it spread to much bigger productions. Action RPGs like Diablo II (2000) used randomized map layouts and item drops to keep replayability high. The Elder Scrolls IV: Oblivion (2006) featured Radiant AI, a system to govern NPC daily routines, and procedural content for quests and dialogues (with mixed results, occasionally producing quirky behavior). The approach kept scaling up in the decade that followed: the ambitious No Man’s Sky (2016) took procedural design to the extreme, offering about 18 quintillion unique planets by generating planets, ecosystems, and creatures algorithmically rather than manually.
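The key trick behind this kind of content is that a seeded generator always produces the same result, so a world can be regenerated on demand instead of stored. The sketch below illustrates that idea only; the biome list, attribute ranges, and function name are invented for the example and do not describe any shipped game. The 18 quintillion figure is commonly explained as the number of distinct 64-bit seeds, which is treated here as an assumption.

```python
import random

BIOMES = ["desert", "ocean", "jungle", "ice", "barren"]  # hypothetical list

# Number of distinct 64-bit seeds, roughly 1.8 * 10**19 ("18 quintillion").
SEED_SPACE = 2 ** 64


def generate_planet(seed):
    """Derive a planet's attributes deterministically from its seed."""
    rng = random.Random(seed)  # private generator, so results depend only on seed
    return {
        "biome": rng.choice(BIOMES),
        "radius_km": rng.randint(2000, 8000),
        "moons": rng.randint(0, 4),
    }
```

Because `generate_planet(seed)` returns the same dictionary every time for a given seed, two players visiting the same coordinates see the same planet without the game ever saving it.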

Procedural generation and advanced AI often went hand-in-hand. For example, Valve’s co-operative shooter Left 4 Dead (2008) introduced the “AI Director,” an AI system that monitored players’ stress and performance and dynamically adjusted the game, spawning enemies or creating quiet lulls to craft a dramatic horror experience. Unlike a fixed difficulty setting, the AI Director in Left 4 Dead would continuously tweak the pacing – if the players were low on health and ammo, it might ease up the hordes, or if players were breezing through, it would throw a surprise swarm at them. Gabe Newell of Valve described the result as “procedural narrative” – the game “sculpts a dramatically different experience that fits perfectly with what players are capable of” each run. In other words, the AI adapted the challenge to keep players in that sweet spot of tension, never quite sure what was coming next. This form of adaptive difficulty was a leap forward in game AI’s role as a dungeon master orchestrating the overall experience, not just controlling individual enemies.
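The pacing logic described above can be approximated with a handful of rules. This sketch is not Valve's algorithm: the inputs are assumed to be normalized to the range 0 to 1, and every threshold and label is a made-up value chosen only to show the shape of the decision.

```python
def choose_pacing(team_health, ammo, intensity):
    """Pick the next pacing phase from normalized (0.0 to 1.0) measurements.

    team_health and ammo describe the players' resources; intensity is a
    hypothetical running estimate of how stressful recent play has been.
    """
    # Players are struggling or have just been through a spike: back off.
    if intensity > 0.8 or team_health < 0.3 or ammo < 0.2:
        return "relax"
    # Players are healthy and have had it quiet for a while: surprise them.
    if intensity < 0.3 and team_health > 0.7:
        return "horde"
    # Otherwise keep slowly ramping up the pressure.
    return "build_up"
```

For instance, a battered team (`choose_pacing(0.2, 0.5, 0.5)`) gets a lull, while a healthy team that has been coasting (`choose_pacing(0.9, 0.9, 0.1)`) gets a horde.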

The 2000s also saw experiments in learning AI within games, though mostly in niche titles. One notable example was Black & White (2001), where you raise a creature that learns from your actions – you could praise or punish your pet monster to teach it how to behave. That game’s AI creature used neural-network-like learning rules to gradually change its behavior, essentially learning from the player’s “training.” Similarly, the Creatures series (mid-1990s into 2000s) was built entirely around artificial life and neural networks, where virtual pets evolved and learned in a sandbox world. These were more experimental and not as common in big-budget games (since unpredictable learning AI can be risky for game design), but they demonstrated the potential of machine learning techniques in gaming long before modern breakthroughs.
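Learning from praise and punishment can be illustrated with a very small model. This is not the algorithm used in Black & White; it is a simple reward-driven preference update (each preference moves part of the way toward the latest feedback), and the class, action names, and learning rate are hypothetical.

```python
class Creature:
    """Keeps one preference score per action and nudges it with feedback."""

    def __init__(self, actions, learning_rate=0.5):
        self.preference = {action: 0.0 for action in actions}
        self.learning_rate = learning_rate

    def choose(self):
        """Pick the action with the highest preference (first one wins ties)."""
        return max(self.preference, key=self.preference.get)

    def feedback(self, action, reward):
        """Move the action's preference toward the reward (+1 praise, -1 punish)."""
        current = self.preference[action]
        self.preference[action] = current + self.learning_rate * (reward - current)
```

A creature created with `Creature(["eat_villager", "water_crops"])` starts out choosing `eat_villager`; after one punishment for that action its preference drops below zero and `choose()` switches to `water_crops`.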

The 2010s: Immersive Companions, Adaptive Enemies, and Human-Like AI

In the 2010s, as graphics and storytelling took huge strides, game AI advanced toward greater realism and narrative integration. Developers aimed to make AI characters – both friends and foes – behave in ways that felt believable in a story context, not just challenge the player mechanically. This era gave us sympathetic companion AI, enemies that react emotionally or tactically to the player’s actions, and large open worlds populated by countless AI-driven entities.

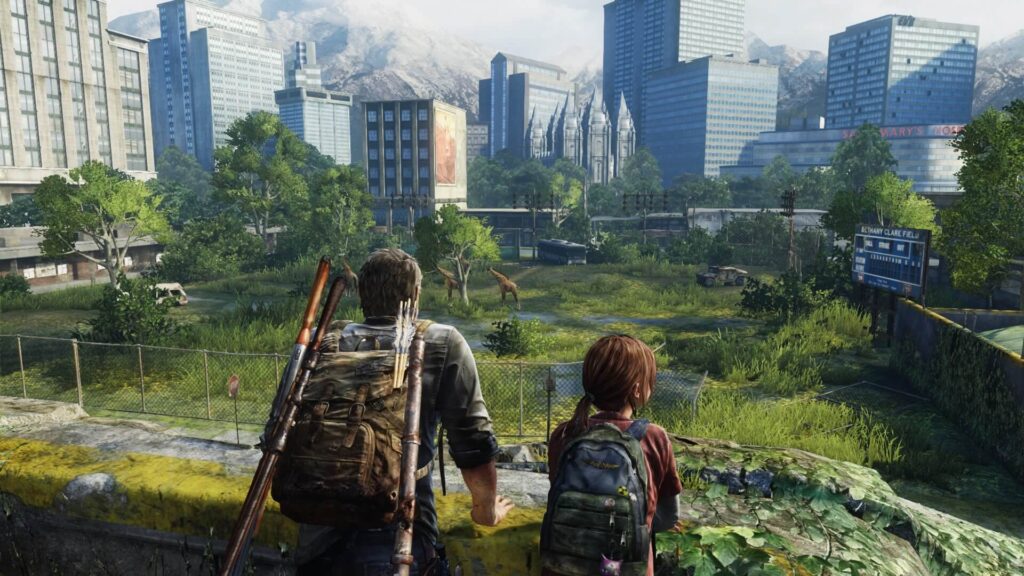

One standout is The Last of Us (2013), praised for both its enemy AI and its companion AI. In this post-apocalyptic action-adventure, the player (Joel) is often accompanied by Ellie, a teenage girl controlled by the AI. Creating a companion who is helpful and never a frustration was a major design goal for Naughty Dog. Ellie’s AI was carefully tuned so that she stays out of the player’s line of fire, hides intelligently during stealth, and occasionally aids the player by throwing bricks or stabbing an enemy who grabs Joel. These behaviors were all dynamic – not scripted cutscenes, but on-the-fly decisions Ellie’s AI makes to help at critical moments. “If you’re cornered, sometimes she’ll jump on their back and stab them. Sometimes she’ll pick up a brick and throw it,” described director Neil Druckmann, emphasizing the game’s systemic approach to companion AI.

The result is a companion who feels like a real partner. Players often commented that Ellie felt alive – she’d call out enemy positions, react to the environment, and never blow your cover in stealth. This was a huge leap from earlier games where escort NPCs might have infamously poor AI. Ellie’s believable behavior greatly enhanced immersion and emotional engagement.

Enemy AI in The Last of Us was equally considered. The human enemies (bandits and hunters) communicated with each other and changed tactics on the fly. They would shout if they spotted the player, flank around if you stayed pinned down, take cover and blind-fire, and crucially, they reacted when their allies were killed. For instance, if you stealthily took out one thug, another might discover the body and call out nervously, or if an enemy saw you take down his friend, he might curse and retreat to cover, changing his behavior now that the odds had shifted. Naughty Dog built what they called a “Balance of Power” AI system – enemies would become bolder or more cautious depending on their numbers and the player’s apparent strength. Druckmann noted they “wanted guys to communicate with each other, to be able to flank, to see when you dropped someone else and back away into cover,” which required rewriting their AI from scratch compared to their previous games.

This made combat encounters feel tense and realistic – enemies weren’t mindless monsters; they seemed to value their lives and react with a semblance of fear or anger. Small touches, like enemies sometimes hesitating or calling out “He’s out of ammo!” when the player’s gun clicks empty (a scripted yet dynamic response), contributed to the immersion.

Beyond combat, AI also began to serve storytelling in subtle ways. In The Last of Us Part II (2020), enemies call each other by name (e.g. “Alex is down!”) and even the guard dogs have names, which was intentionally done to make the player feel a pang of guilt – a design choice layered on top of the AI systems to serve narrative emotion. While these specific examples are scripted lines rather than emergent AI behavior, they show how AI and narrative design started blending to create a more emotional player experience. Game AI was no longer just about outsmarting the player – it became about making the game’s world and characters feel convincing.

Another influential system of the 2010s was the Nemesis System introduced in Middle-earth: Shadow of Mordor (2014). The Nemesis System gave personality and memory to what would otherwise be disposable enemy characters. In this game, if a random orc soldier survived an encounter or managed to kill the player, that orc would be promoted into a named rival (a Nemesis) who could later reappear with upgraded abilities, remember past fights, and even taunt the player about their previous meeting. The game dynamically generated these Nemesis characters with unique strengths, weaknesses, and even visual marks (like scars from the last battle). Over time, a procedurally generated hierarchy of enemy captains and warlords emerged, each with their own AI agendas, battling each other and the player for supremacy. This led to personalized stories – every player had different arch-enemies created through gameplay. The feature was so novel that Warner Bros. patented the Nemesis System, covering the “hierarchy of procedurally-generated NPCs that interact with and remember the player’s actions” and evolve in response.
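The promotion-and-memory loop described above can be sketched with a small data structure. This is an illustration of the general idea only, not the patented system: the `Orc` fields, outcome labels, stat increments, and taunt lines are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Orc:
    name: str
    rank: int = 0    # 0 = ordinary soldier; higher values = captain, warlord, ...
    power: int = 1
    memories: list = field(default_factory=list)  # outcomes of past encounters


def record_encounter(orc, outcome):
    """Remember the outcome and strengthen the orc if it came out ahead."""
    orc.memories.append(outcome)
    if outcome == "killed_player":
        orc.rank += 1   # promoted for defeating the player
        orc.power += 2
    elif outcome == "survived":
        orc.power += 1  # tougher after escaping, but not promoted


def taunt(orc):
    """Choose a line based on what the orc remembers about the player."""
    if not orc.memories:
        return f"{orc.name}: Who are you?"
    if orc.memories[-1] == "killed_player":
        return f"{orc.name}: Back for more? I killed you once already."
    return f"{orc.name}: You won't get away this time."
```

Because each orc carries its own history, two players who meet the same starting enemy can end up with very different rivals.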

The popularity of the Nemesis concept showed how AI systems can drive emergent narrative: the drama of rivalries and revenge in Shadow of Mordor wasn’t hand-written; it arose from the interplay of the game’s AI mechanics and the player’s actions.

In the realm of open-world games, AI made strides to simulate living worlds. The Elder Scrolls V: Skyrim (2011) and Fallout 4 (2015) populated their worlds with hundreds of NPCs following daily schedules, powered by improved Radiant AI. Urban crowd simulations in games like Assassin’s Creed and Grand Theft Auto V created busy streets with AI pedestrians and drivers following traffic rules, reacting to the player’s crimes or fights. While these background AIs are simpler than combat AI, they greatly add to immersion – making the player feel like the world exists beyond their actions.

Finally, the 2010s saw some use of machine learning in game AI, though it was mostly behind the scenes. One notable example was the Drivatar system in Microsoft’s Forza Motorsport series. The concept dates back to the first Forza Motorsport (2005), and the cloud-based version that arrived with Forza Motorsport 5 (2013) uses machine learning to model human players’ driving styles, then creates AI racers that behave like real players. In effect, the AI “learns” from how players take corners or when they brake, and those learned behaviors show up in offline races, making AI opponents feel less like perfect robots and more like real drivers (with human-like tendencies to make mistakes or take risks). This was an early glimpse of utilizing big data and ML to enhance game AI.

The Future: Machine Learning, Neural Networks, and Beyond

Game AI continues to evolve, and the coming years promise even more exciting advancements. One major trend is the increasing use of machine learning and neural networks to create more advanced AI behaviors. Traditionally, game AI has been engineered by humans – designers and programmers explicitly code how an NPC behaves in various situations. But with modern AI techniques, we’re starting to see NPCs that can be trained rather than explicitly programmed, opening the door for more organic and adaptable behavior.

Reinforcement learning (RL), the technique behind breakthroughs like DeepMind’s AlphaGo, is one area being explored for games. Imagine an NPC enemy that isn’t scripted to dodge a grenade, but has learned through thousands of simulations that moving away from a grenade leads to better survival – that NPC might surprise players with novel tactics. In controlled environments, this is already happening: researchers have trained AI agents to play DOOM and Quake III Arena competitively, in some setups matching or beating skilled human players. While these AI bots aren’t yet dropping into your average FPS game, the technology shows promise for creating enemies that can truly learn and adapt to players in real time. Some fighting games and strategy games in development are experimenting with ML-driven opponents that analyze a player’s style and change their strategy accordingly (a literal adaptive learning AI). This could mean that in the future, single-player games might offer opponents that continue to challenge even highly skilled players, by observing and countering their favorite tactics – a sort of personalized difficulty that goes beyond simple “easy/medium/hard” settings.
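The grenade example can be turned into a toy learning problem. The sketch below uses epsilon-greedy value learning on a single decision, which is a one-step, bandit-style simplification of Q-learning rather than the deep RL used in the research mentioned above. The reward values, action names, and hyperparameters are assumptions chosen for illustration.

```python
import random

ACTIONS = ["stay", "move_away"]


def reward(action):
    """Hypothetical outcome of a grenade landing nearby: survive or get hurt."""
    return 1.0 if action == "move_away" else -1.0


def train(episodes=200, alpha=0.1, epsilon=0.2, seed=0):
    """Learn a value estimate for each action from repeated simulated grenades."""
    rng = random.Random(seed)
    q = {action: 0.0 for action in ACTIONS}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)   # explore: try something at random
        else:
            action = max(q, key=q.get)     # exploit: use the best estimate so far
        # Move the estimate a fraction alpha toward the observed reward.
        q[action] += alpha * (reward(action) - q[action])
    return q


def best_action(q):
    """Return the action the trained agent would pick."""
    return max(q, key=q.get)
```

Nothing in `train` says that grenades are dangerous; the preference for `move_away` emerges from the rewards alone, which is the property that makes learned behavior potentially surprising.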

Neural networks are also being used for procedural content generation in more sophisticated ways. Instead of using handcrafted algorithms, developers can train neural nets on level data or images and have them generate new content. There’s research on AI that can create new Super Mario levels by learning from existing ones, or AI that generates dialogue lines for NPCs based on vast datasets of text. With the rise of powerful natural language models (like GPT-3 and beyond), we might soon converse with game characters that can improvise dialogue convincingly, making RPGs and adventure games more interactive. Imagine a future open-world game where minor NPCs don’t all spout the same few canned lines, but can chat or answer questions with AI-generated lines that fit their personality and the game lore (within the safety confines set by writers). Some experimental projects and indie games are already dabbling in this, pointing to a future where AI could contribute to narrative and not just gameplay.
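Learning to generate levels from existing ones can be shown at toy scale. The sketch below deliberately swaps the neural network for a first-order Markov chain, a much simpler statistical model, so that the whole idea fits in a few lines. The tile alphabet (`-` for ground, `G` for gap, `P` for pipe) and the training strings are invented for the example.

```python
import random
from collections import defaultdict


def learn_transitions(levels):
    """Record, for each tile, every tile that followed it in the training levels."""
    table = defaultdict(list)
    for level in levels:
        for current, following in zip(level, level[1:]):
            table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Build a new level by repeatedly sampling a plausible next tile."""
    rng = random.Random(seed)
    level = [start]
    while len(level) < length:
        options = table.get(level[-1])
        if not options:  # tile never had a successor in the training data
            break
        level.append(rng.choice(options))
    return "".join(level)


TRAINING_LEVELS = ["--G--P--", "-P--G---"]  # hypothetical hand-made levels
```

Every adjacent pair of tiles in a generated level also appears somewhere in the training levels, so the output inherits their local style (for example, gaps and pipes are always followed by ground) while the overall layout is new.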

Another emerging application is using AI for animation and realism. Machine learning can power more realistic character animations – for example, systems that use neural networks to produce fluid character motion, adapting on the fly to terrain or player input (so that AI characters move and react in a very lifelike manner, without animators having to script every transition). Earlier games pointed in this direction with procedural and data-driven techniques that are not necessarily neural: Red Dead Redemption 2 (2018) used the Euphoria physics-based animation engine to make NPC reactions unique, and The Last of Us Part II used motion matching (a data-driven animation technique) to seamlessly blend animations. As hardware improves, we could see fully AI-driven animation where characters physically respond to the world and events with natural body language.

It’s worth noting that as AI in games becomes more advanced, developers also have to ensure it remains fun. A hyper-intelligent, uncannily accurate enemy might be realistic – but not enjoyable if it defeats players mercilessly. Game AI often needs a touch of imperfection or predictability so that players can learn and improve. Thus, designers will likely continue balancing advanced AI capabilities with purposeful limits to give players a fair and interesting challenge. Future AI might even include adjustable personality sliders – for instance, an AI opponent could be tuned to be more aggressive or defensive based on the player’s preference, or even to role-play a certain style (imagine a strategy game where you could select the AI general’s “personality” – cautious strategist vs. rush-down attacker).

In terms of industry impact, we may also see more AI-driven game design tools. AI can assist developers by playtesting games (bots finding exploits or balancing issues), or by generating parts of games (like filling a huge open world with sensible terrain, or populating a village with NPCs with auto-generated backstories). This doesn’t replace human creativity, but augments it – allowing creators to focus on high-level design while AI handles the grunt work of content scaling.

Finally, there is the ethical and experiential aspect: as game AI becomes more human-like, our relationships to virtual characters could deepen. Players already feel emotional connections to characters like Ellie, their Nemesis rival, or their Pokémon partners. With smarter AI, those connections might feel even more genuine. This raises new design questions: How do you create an AI character that players can truly bond with? How do you simulate emotions like fear, anger, or love in a way players recognize? These are frontiers that combine technology with artistry.

Conclusion

From the maze-dwelling ghosts of Pac-Man to the neural network-trained agents of tomorrow, the evolution of game AI has been a journey toward greater depth, realism, and interactivity. Each decade brought breakthroughs: the 1980s introduced the concept of distinct enemy behaviors to give games personality, the 1990s saw enemies start coordinating and reacting to players’ actions, the 2000s delivered tactical planning AI and the rise of procedurally generated worlds, and the 2010s made AI an integral part of storytelling and adaptive gameplay. These advances have fundamentally influenced game design – enabling new genres (stealth games, for instance, rely heavily on AI perception systems), improving replay value (through procedural generation and adaptive difficulty), and immersing players in worlds that feel alive and responsive.

The landmark games we’ve discussed acted as milestones in this evolution. Pac-Man taught us that even simple AI rules can create iconic gameplay. Half-Life proved that players love a game that fights back with clever tactics. F.E.A.R. showed that planning and coordination can make AI enemies as memorable as any human boss. The Last of Us set a high bar for AI that supports narrative and emotional engagement, making our digital companions and adversaries feel real enough to care about. And modern games like No Man’s Sky and Shadow of Mordor revealed that AI can also generate vast experiences and personal stories unique to each player.

As we look to the future, the gap between game AI and real-world AI research continues to narrow. Techniques from academic AI are finding their way into game development – from deep learning to cognitive modeling – opening possibilities for truly adaptive, learning, and perhaps even creative game AIs. Imagine a game where the NPCs improvise strategies or dialogue in ways the developers didn’t explicitly script, or a game that learns from the entire player community and updates its challenges dynamically. Such ideas are no longer science fiction; they are on the horizon.

Yet, the goal of game AI will always be slightly different from academic AI. It’s not about creating an AI that simply beats the player (what fun would that be?), but about creating an AI that delivers the best experience to the player. That might mean sometimes the AI “makes mistakes” on purpose, or choreographs a dramatic near-escape that wasn’t strictly necessary – because the aim is to entertain, not to win. The artistry in game AI is balancing intelligence with fallibility, unpredictability with fairness, challenge with fun.

The evolution of AI in video games is a testament to human creativity: we build machines to entertain us, to challenge us, and even to be our virtual friends and foes. As technology advances, one can only imagine the rich, reactive worlds and characters that await us. One thing is certain – game AI will continue to be at the heart of what makes games immersive and engaging. After all, a game world is only as alive as the AI that inhabits it. And as we’ve seen from the early maze chases to today’s adaptive epics, making worlds feel alive has been the driving passion behind game AI’s evolution.